
How to locally host DeepSeek (or any other LLM) and use it for automated code actions

Introduction

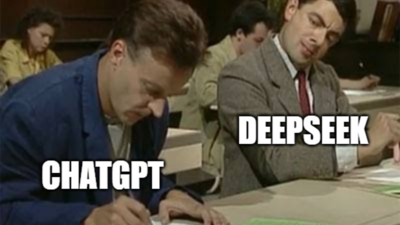

As many have noticed, DeepSeek has become a very hot topic. It has also reopened the discussion about calling LLM providers such as OpenAI and DeepSeek directly. When you use these providers, your data is sent to them and may be stored, which in many cases is not what you want.

Furthermore, it is nice to experiment locally without API rate limits or usage costs.

Therefore, I have written this article to help anyone who wants to work with local models. A note to readers: this article focuses on getting everything running, rather than on the inner workings, design choices, or optimizations.

Requirements

LLMs like DeepSeek require a lot of storage and memory to run.

Fortunately, I have an Apple MacBook Pro (Apple silicon, M-series architecture) with the following specs:

- Apple M2 Max

- 64 GB memory

- 2 TB storage
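To get a feel for why memory matters, a common back-of-the-envelope estimate is that the model weights alone take roughly (number of parameters) × (bits per parameter) / 8 bytes. The sketch below applies that rule; the model sizes, precisions, and the `usable_fraction` of 0.75 are illustrative assumptions, not measurements, and real usage is higher once the context (KV) cache and the hosting application are added.

```python
def estimate_weights_gb(params_billion: float, bits_per_param: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 10**9 bytes).

    params_billion * 10**9 parameters, each taking bits_per_param / 8 bytes,
    divided by 10**9 bytes per GB: the 10**9 factors cancel out.
    """
    return params_billion * bits_per_param / 8


def fits_in_memory(weights_gb: float, total_memory_gb: float,
                   usable_fraction: float = 0.75) -> bool:
    """Hypothetical rule of thumb: keep the weights within a fraction of total
    memory, leaving room for the OS, the KV cache and the hosting app."""
    return weights_gb <= total_memory_gb * usable_fraction


if __name__ == "__main__":
    total_gb = 64  # the machine described above
    # Hypothetical (parameters in billions, bits per parameter) pairs.
    for params, bits in [(7, 16), (7, 4), (70, 16), (70, 4)]:
        size = estimate_weights_gb(params, bits)
        verdict = "fits" if fits_in_memory(size, total_gb) else "too large"
        print(f"{params}B @ {bits}-bit ~ {size:.1f} GB -> {verdict}")
```

Under these assumptions, a 70B model only fits on a 64 GB machine when quantized to around 4 bits (about 35 GB of weights), while the same model at 16 bits (about 140 GB) does not.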

I am running macOS Sequoia 15.3, the latest release at the time of writing.
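If you want to check your own machine before downloading multi-gigabyte models, a small script using only the Python standard library can report the OS, macOS version, CPU architecture, and free disk space. This is a convenience sketch, not part of the author's setup; the `describe_machine` helper is hypothetical. Note that a Python interpreter running under Rosetta reports `x86_64` even on Apple silicon.

```python
import platform
import shutil


def describe_machine(path: str = "/") -> dict:
    """Collect a few facts relevant to hosting an LLM locally (hypothetical helper)."""
    usage = shutil.disk_usage(path)           # named tuple: total, used, free (bytes)
    mac_release = platform.mac_ver()[0]       # empty string when not on macOS
    return {
        "os": platform.system(),              # "Darwin" on macOS
        "macos_version": mac_release or None,
        "arch": platform.machine(),           # "arm64" on a native Apple silicon Python
        "free_disk_gb": usage.free / 1e9,
    }


if __name__ == "__main__":
    info = describe_machine()
    for key, value in info.items():
        print(f"{key}: {value}")
    if info["os"] == "Darwin" and info["arch"] != "arm64":
        print("Note: this Python does not report Apple silicon (arm64).")
```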

To run LLMs locally, I found it easiest to use helper applications that host the models for you. I tried the following two applications: